It was nearly impossible not to get caught up in the bubbly, colorful, Disneyland-like vibe of Meta’s Connect developer and creator conference yesterday, held for the first time in person since before the pandemic at Meta headquarters in Menlo Park, California.

Hearing the crowd clap during the event’s keynote every time Mark Zuckerberg announced another cool, mind-blowing or just plain adorable AI-driven product (AI stickers! AI characters! AI image of Zuck’s dog!) reminded me of being a kid watching the orca shows at Sea World in wonder: Ooh! The orca just clapped! Ahhh…look how high it can jump!

That’s because Meta AI’s offerings were incredibly impressive — at least in their demo forms. Chatting with Snoop Dogg as a dungeon master on Facebook, Instagram or WhatsApp? Yes, please. Ray-Ban Smart Glasses with built-in voice AI chat? I’m totally in. AI-curated restaurant recommendations in my group chat? Where has this been all my life?

But the interactive, playful, fun nature of Meta’s AI announcements, even those involving tools for business and brand use, comes at a moment when Big Tech’s growing number of fast-paced AI product releases, including last week’s Amazon Alexa news and Microsoft’s Copilot announcements, is raising concerns about security, privacy and just plain-old tech hubris.

Google Search exposed Bard conversations

As VentureBeat’s Carl Franzen reported on Monday, following last week’s big Bard update, which earned mixed reviews, an older Bard feature came under scrutiny this week: Google Search had begun to index shared Bard conversation links in its search results pages, potentially exposing information that users meant to keep contained or confidential.

This means that if a person asked Bard a question and shared the link with a spouse, friend or business partner, the conversation accessible at that link could in turn be scraped by Google’s crawler and show up publicly, to the entire world, in the search results.

There’s no doubt that this was a big Bard fail on what was meant to be a privacy feature — it led to a wave of concerned conversations on social media, and forced Google, which declined to comment to Fast Company on the record, to point to a tweet from Danny Sullivan, the company’s public liaison for search. “Bard allows people to share chats, if they choose,” Sullivan wrote. “We also don’t intend for these shared chats to be indexed by Google Search. We’re working on blocking them from being indexed now.”

But will that be enough to convince users to continue to put their trust in Bard? Only time will tell.

ChatGPT as ‘wildly effective’ therapist — dangerous AI hype?

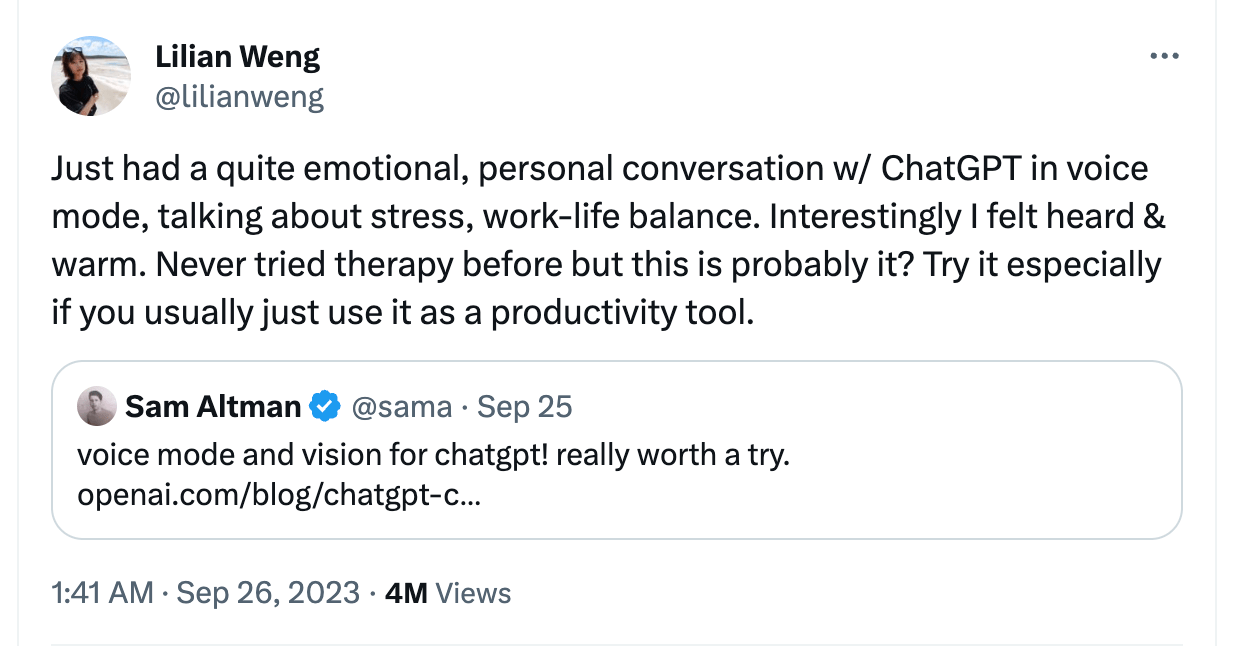

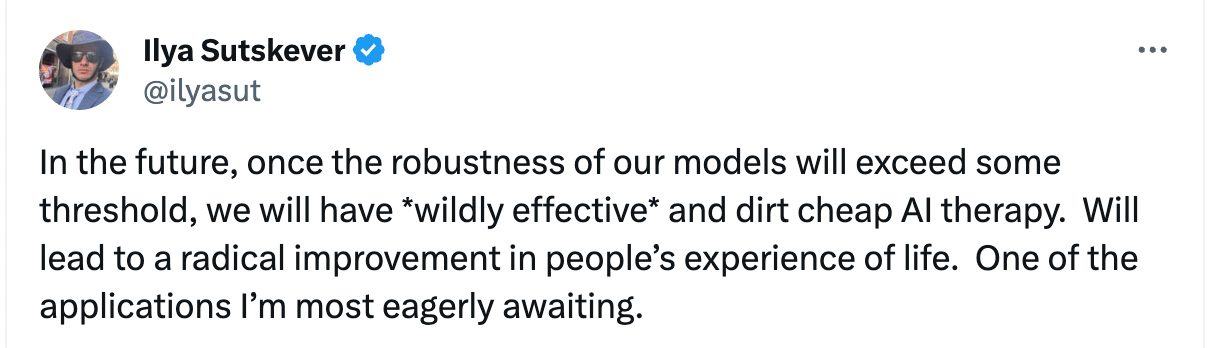

Another concerning AI product moment of the week: As OpenAI CEO Sam Altman touted ChatGPT’s new voice mode and vision on X, Lilian Weng, head of safety systems at OpenAI, tweeted about her “therapy” session with ChatGPT:

Weng received a wave of pushback for her comments about ChatGPT therapeutic use cases, but OpenAI cofounder and chief scientist Ilya Sutskever doubled down on the idea, saying that in the future we will have ‘wildly effective’ and ‘dirt cheap AI therapy’ that will ‘lead to a radical improvement in people’s experience of life.’

Given that Weng and Sutskever are not mental health experts or qualified therapists, this seems like a dangerous, irresponsible tack to take when these tools are about to be so widely adopted around the world — and actual lives can be impacted. Certainly it’s possible that people will use these tools for emotional support or a therapy of sorts — but does that mean therapy is a proper, responsible use case for LLMs and that the company marketing the tool (let alone the chief scientist developing it) should be promoting it as such? Seems like a lot of red flags there.

Meta takes AI fully mainstream

Back to Meta: The company’s AI announcements seemed like the biggest step so far toward bringing generative AI fully into the mainstream. Yes, Amazon’s latest Alexa LLM will be tied to the home, Microsoft’s Copilot is heading to nearly every office, and OpenAI’s ChatGPT started it all.

But AI chat in Facebook? AI-generated images in Instagram? Sharing AI chats, stickers and photos in WhatsApp? This will take the number of generative AI users into the billions. And with the dizzying speed of AI product deployment from Big Tech, I can’t help but wonder if any of us can properly comprehend what that really means.

I’m not saying that Meta is not taking its AI efforts seriously. Far from it, according to a blog post the company published about its efforts to build generative AI features responsibly. The document emphasizes that Meta is building safeguards into its AI features and models before it launches them; will continue to improve the features as they evolve; and is “working with governments, other companies, AI experts in academia and civil society, parents, privacy experts and advocates, and others to establish responsible guardrails.”

But of course, like everything else with AI these days, the consequences of these product rollouts remain to be seen, since they have never been done before. It seems like we are all in the midst of one massive RLHF (reinforcement learning from human feedback) experiment, as the world begins to use these generative AI products and features, at scale, out in the wild.

And just like with AI, scale matters: As billions of people try out the latest AI tools from Meta, Amazon, Google and Microsoft, there are bound to be more Bard-like fails coming soon. Here’s hoping the consequences are minor — like a simple chat with Snoop Dogg, the Dungeon Master, gone awry.
