OpenAI held its first developer event on Monday, and it was action-packed. The company launched improved models and new APIs. Here is a summary of all the announcements in case you missed the event.

A year after its launch, ChatGPT is hugely popular: OpenAI said that more than 100 million people use the tool every week. ChatGPT was one of the fastest consumer products to reach 100 million monthly users, doing so just a few months after its launch.

The company also noted that more than 2 million developers are building solutions through its API.

The AI company unveiled GPT-4 Turbo, an improved version of its popular GPT-4 model, at the developer event. OpenAI said the new Turbo model comes in two versions: one that analyzes only text and another that understands both text and images. The text-only model is priced at $0.01 per 1,000 input tokens and $0.03 per 1,000 output tokens. Processing a 1080×1080-pixel image with GPT-4 Turbo will cost $0.00765.
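To make the per-token pricing concrete, here is a minimal Python sketch that estimates the cost of a single request. It assumes the prices quoted above stay fixed and that every image is 1080×1080 pixels; the function and constant names are hypothetical and not part of any OpenAI SDK.

```python
# A rough per-request cost estimate for GPT-4 Turbo, using the prices quoted
# above. These constants are assumptions copied from the announcement and may
# change; check OpenAI's current pricing before relying on them.
INPUT_PRICE_PER_1K = 0.01     # USD per 1,000 input tokens
OUTPUT_PRICE_PER_1K = 0.03    # USD per 1,000 output tokens
IMAGE_1080_PRICE = 0.00765    # USD per 1080x1080 image (vision version)


def estimate_cost(input_tokens: int, output_tokens: int, images_1080: int = 0) -> float:
    """Estimate the USD cost of one request from token and image counts."""
    if min(input_tokens, output_tokens, images_1080) < 0:
        raise ValueError("counts must be non-negative")
    text_cost = (input_tokens / 1000) * INPUT_PRICE_PER_1K
    text_cost += (output_tokens / 1000) * OUTPUT_PRICE_PER_1K
    return text_cost + images_1080 * IMAGE_1080_PRICE


if __name__ == "__main__":
    # 10,000 input tokens + 2,000 output tokens: 10 * $0.01 + 2 * $0.03 = $0.16
    print(f"${estimate_cost(10_000, 2_000):.2f}")
    # Adding four 1080x1080 images adds 4 * $0.00765 = $0.0306
    print(f"${estimate_cost(10_000, 2_000, images_1080=4):.4f}")
```

Output tokens cost three times as much as input tokens at these rates, so long generated answers dominate the bill faster than long prompts do.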

GPT-4 Turbo has a context window of 128,000 tokens, or roughly 100,000 words, which is four times the 32,000-token window of the largest GPT-4 model. The new model also has a knowledge cutoff of April 2023, compared with GPT-4’s September 2021 cutoff.
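The token and word figures above imply roughly 0.78 words per token. The sketch below uses that ratio to guess whether a document fits in the 128,000-token window. It is only an approximation: real token counts require a tokenizer (OpenAI publishes `tiktoken` for this), and the output headroom value is a hypothetical choice, not an official limit.

```python
import math

# Back-of-the-envelope check of whether a document fits in GPT-4 Turbo's
# 128,000-token context window. The words-per-token ratio is derived from the
# "128,000 tokens or roughly 100,000 words" figure and is an approximation.
CONTEXT_WINDOW_TOKENS = 128_000
WORDS_PER_TOKEN = 100_000 / 128_000  # = 0.78125


def estimated_tokens(text: str) -> int:
    """Approximate token count from a whitespace-separated word count."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """True if the estimated prompt plus reserved output tokens fit the window.

    reserved_for_output is a hypothetical headroom value for the reply.
    """
    return estimated_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    manuscript = "word " * 90_000  # stand-in for a 90,000-word manuscript
    print(estimated_tokens(manuscript), fits_in_context(manuscript))
```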

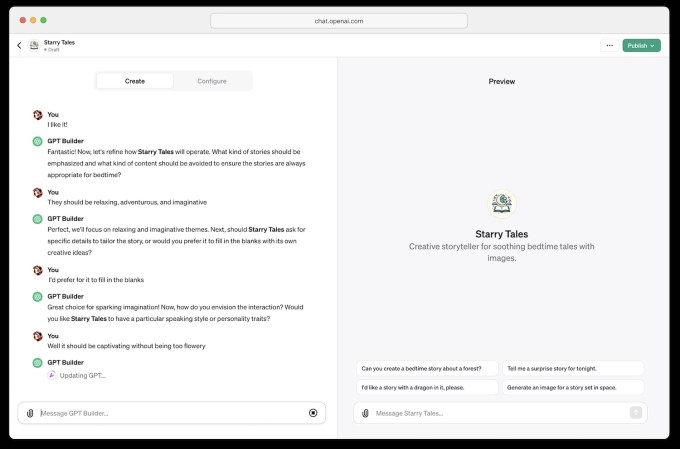

OpenAI announced that it will let users create their own versions of GPT for fun or productivity use cases. Users will be able to build these bots entirely through prompts, without needing to know how to code. The company will also let enterprise customers make internal-only GPTs built on top of their own company’s knowledge base.

The company said developers can connect GPTs to external data sources, such as databases or knowledge bases like email, so the bots can pull in outside information.

Image Credits: OpenAI GPT Store

OpenAI also plans to let users publish these GPTs to a store, which is coming later this month. The store will initially feature creations from “verified builders.” OpenAI CEO Sam Altman also talked about paying creators whose GPTs become popular.


Notably, Quora’s Poe platform, which also lets users build bots through prompts, opened up a creator payment program last week.

Example GPTs are available to ChatGPT Plus and ChatGPT Enterprise customers starting today.

At its first developer day event, OpenAI launched a new Assistants API that lets developers build their own “agent-like experiences.” Developers can make agents that retrieve outside knowledge or call programming functions to perform specific actions. Use cases range from a coding assistant to an AI-powered vacation planner.
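In function calling as OpenAI describes it, the model does not run code itself: it names a function and supplies JSON-encoded arguments, and the developer's application executes the call and sends the result back. The Python sketch below shows only that application-side dispatch step for a hypothetical vacation-planner assistant. `get_flight_price`, `convert_currency`, and `dispatch_tool_call` are illustrative names, the data is stubbed, and the model's request is simulated rather than fetched from the Assistants API.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical local functions an assistant might be allowed to call.
def get_flight_price(origin: str, destination: str) -> Dict[str, Any]:
    # Stub data for illustration only; a real app would query a booking service.
    return {"origin": origin, "destination": destination, "price_usd": 420}


def convert_currency(amount: float, rate: float) -> Dict[str, Any]:
    return {"converted": round(amount * rate, 2)}


# Registry mapping the function names the model may request to local callables.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_flight_price": get_flight_price,
    "convert_currency": convert_currency,
}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the function the model asked for and return its result as JSON."""
    func = TOOLS.get(name)
    if func is None:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        kwargs = json.loads(arguments_json)
        result = func(**kwargs)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON, non-object arguments, or wrong parameter names.
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


if __name__ == "__main__":
    # Simulated request from the model: a function name plus JSON arguments.
    print(dispatch_tool_call("get_flight_price", '{"origin": "SFO", "destination": "LIS"}'))
```

Returning errors as JSON instead of raising lets the failure be passed back to the model, which can then retry or explain the problem; this is a design choice of the sketch, not a documented requirement.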

Read more about OpenAI’s new API that helps developers build assistants.

OpenAI’s text-to-image model, DALL-E 3, is now available through the API with built-in moderation tools. Output resolutions range from 1024×1024 to 1792×1024 across square, landscape, and portrait formats. OpenAI has priced the model starting at $0.04 per generated image.

Read more about the DALL-E 3 API.

The company launched a new text-to-speech API, called the Audio API, with six preset voices: Alloy, Echo, Fable, Onyx, Nova, and Shimmer. The API provides access to two generative AI models and is priced at $0.015 per 1,000 input characters.
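Because text-to-speech is billed per character rather than per token, cost scales directly with script length. This minimal sketch treats the $0.015 per 1,000 characters figure as a flat rate (an assumption, since the two models may be priced differently) and assumes the voice identifiers are the lowercase voice names; `estimate_tts_cost` is a hypothetical helper, not an SDK function.

```python
# Estimate text-to-speech cost at the $0.015 per 1,000 characters quoted above,
# treated here as a flat rate. Lowercase voice identifiers are an assumption.
TTS_VOICES = frozenset({"alloy", "echo", "fable", "onyx", "nova", "shimmer"})
PRICE_PER_1K_CHARS = 0.015  # USD


def estimate_tts_cost(text: str, voice: str) -> float:
    """Return the estimated USD cost of converting text to speech."""
    if voice.lower() not in TTS_VOICES:
        raise ValueError(f"unknown voice: {voice!r}")
    return len(text) / 1000 * PRICE_PER_1K_CHARS


if __name__ == "__main__":
    script = "x" * 20_000  # stand-in for a 20,000-character narration script
    # 20 * $0.015 = $0.30
    print(f"${estimate_tts_cost(script, 'Nova'):.2f}")
```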

Read more about the new text-to-speech API.

OpenAI announced a new program called Copyright Shield at its developer conference, promising to protect businesses that use the AI company’s products from copyright claims. The company said it will pay the legal costs of customers using “generally available” features of OpenAI’s developer platform and ChatGPT Enterprise if they face IP lawsuits over content created with OpenAI’s tools.

Recently, companies like Microsoft, Google-backed Cohere, Amazon, and IBM have announced that they’ll indemnify customers against IP infringement claims.

Read more about OpenAI’s Copyright Shield program.

Other announcements from the event

There were also some other notable announcements from the event:

- OpenAI will start a program to let companies build their own custom models with help from the AI company’s researchers.

- The model picker in ChatGPT is going away, so people can simply use the tool without worrying about which model to pick.

- The company launched the next version of its open-source speech recognition model, Whisper large-v3.

- The company is doubling the tokens-per-minute rate limit for all paying GPT-4 customers.