Patronus AI, a New York-based startup, today unveiled Lynx, an open-source model designed to detect and mitigate hallucinations in large language models (LLMs). This breakthrough could reshape enterprise AI adoption as businesses across sectors grapple with the reliability of AI-generated content.

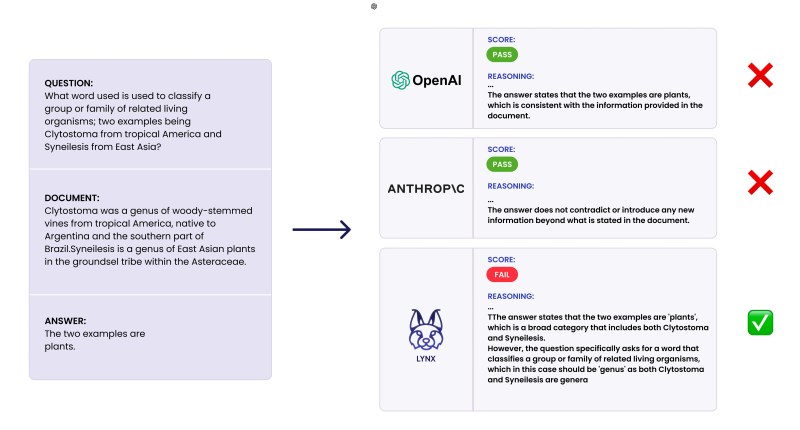

According to Patronus AI, Lynx outperforms industry giants like OpenAI’s GPT-4 and Anthropic’s Claude 3 in hallucination detection tasks, which would represent a significant leap forward in AI trustworthiness. The company reports that Lynx achieved 8.3% higher accuracy than GPT-4 in detecting medical inaccuracies and surpassed GPT-3.5 by 29% across all tasks.

Battling AI’s imagination: How Lynx detects and corrects LLM hallucinations

Anand Kannappan, CEO of Patronus AI, explained the significance of this development in an interview with VentureBeat. “Hallucinations in large language models occur when the AI generates information that is false or misleading, making things up as if they were facts,” he said. “For enterprises, this can lead to incorrect decision-making, misinformation, and a loss of trust from clients and customers.”

Patronus AI also released HaluBench, a new benchmark for evaluating AI model faithfulness in real-world scenarios. This tool stands out for its inclusion of domain-specific tasks in finance and medicine, areas where accuracy is crucial.

“Industries that deal with sensitive and precise information, such as finance, healthcare, legal services, and any sector requiring stringent data accuracy, will benefit greatly from Lynx,” Kannappan noted. “Its ability to detect and correct hallucinations ensures that critical decisions are based on accurate data.”

Open-Source AI: Patronus AI’s strategy for widespread adoption and monetization

The decision to open-source Lynx and HaluBench could accelerate the adoption of more reliable AI systems across industries. However, it also raises questions about Patronus AI’s business model.

Kannappan addressed this concern, stating, “We plan to monetize Lynx through our enterprise solutions that include scalable API access, advanced evaluation features and workflows, and bespoke integrations tailored to specific business needs.” This approach aligns with the broader trend of AI companies offering premium services built on open-source foundations.

The launch of Lynx comes at a critical juncture in AI development. Enterprises increasingly rely on LLMs for various applications, creating an urgent need for robust evaluation and error-detection tools. Patronus AI’s innovation could play a crucial role in building trust in AI systems, potentially accelerating their integration into critical business processes.

The future of AI reliability: Human oversight in an increasingly automated world

Challenges remain on the horizon. Kannappan pointed out, “The next major challenge will be developing scalable oversight mechanisms that allow humans to effectively supervise and validate AI outputs.” This highlights the ongoing need for human expertise in AI deployment, even as tools like Lynx push the boundaries of automated evaluation.

As the AI landscape evolves rapidly, Patronus AI’s contribution marks a significant step towards more reliable and trustworthy AI systems. For enterprise leaders navigating the complex world of AI adoption, tools like Lynx could prove invaluable in mitigating risks and maximizing the potential of this transformative technology.

Source link